Are you one of those who followed the trend of editing photos in the style of ‘Ghibli’ last Eid?

The trend has now spread to other visual styles, such as The Muppets , Pixar , and action heroes .

Aesthetic images created by artificial intelligence (AI) are certainly appealing. However, behind the stunning visuals, there is the potential for copyright infringement and misuse of personal data.

Are you one of those who followed the trend of editing photos in the style of ‘Ghibli’ last Eid?

The trend has now spread to other visual styles, such as The Muppets , Pixar , and action heroes .

Aesthetic images created by artificial intelligence (AI) are certainly appealing. However, behind the stunning visuals, there is the potential for copyright infringement and misuse of personal data.

The ‘grey’ processes behind AI image generation

Many users are often unaware that AI systems are trained to use data from all over the internet . As a result, copyrighted works can be dragged into it without permission.

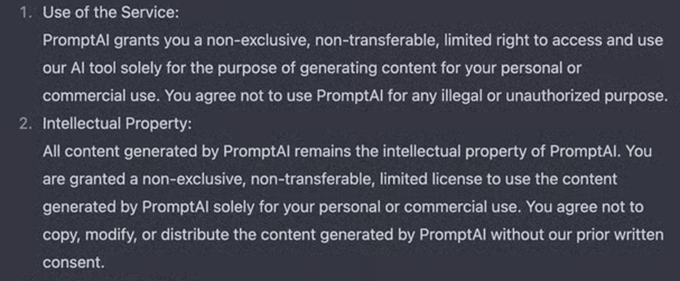

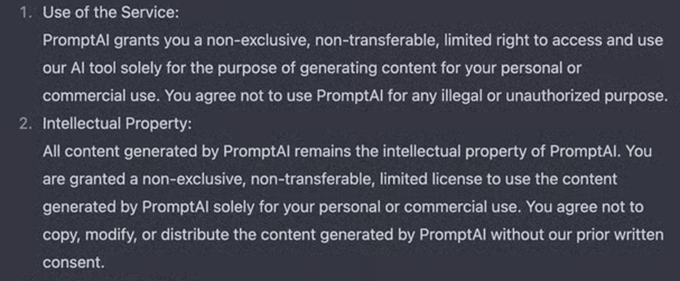

In addition, the AI system is also trained to collect data from user input with the permissions granted by the user when they first register.

Screenshot of OpenAI’s terms and conditions. Author provided.

How AI can ‘violate’ copyright and privacy

AI has the potential to infringe copyright at least on two levels.

First , when training, AI systems typically require copies of large amounts of data—including copyrighted data—to build their models.

AI is trained through two main methods : web scraping (taking data from public sites like Wikipedia and GitHub) and data licensing (through formal contracts with content owners like book publishers or encyclopedia platforms).

The first method is more commonly used by AI developers. However, this method is prone to legal violations—especially if the data collected contains copyrighted works.

Second , once the AI model has been trained, potential copyright infringement can also occur at the production stage, namely when the AI produces new works that imitate distinctive elements of the original work —for example, painting style, color, or character proportions.

Legally , duplicating or adapting copyrighted work without the owner’s permission is potentially a copyright infringement, unless there is a license or exception to this. Even if the resulting image is “different”—but displays characteristics of the original work—it can still be considered a violation.

Another issue that is often overlooked is the risk of privacy violations. User interactions with ChatGPT—both text and images—can be stored and used to train the system , unless the user disables usage data sharing .

OpenAI’s privacy policy states that user data will be retained for as long as possible if chat history is enabled. However, if disabled, OpenAI will retain user data for 30 days.

This leaves users in a vulnerable position, especially if the information shared is sensitive or personal.

OpenAI does provide privacy settings , but user awareness in Indonesia is relatively low . Many do not yet understand that every input can be part of the next AI dataset .

The fate of AI regulations in Indonesia

OpenAI argues that the images they produce are the result of ‘ transformative use ‘ , not a direct copy.

In United States (US) law, this reason is protected by the doctrine of fair use . This means that the use of copyrighted material is allowed as long as the new work is different enough to add value.

However, in the context of Indonesian law, the transformative use reason used by OpenAI is less appropriate.

This is because the Copyright Law does not specifically regulate works produced by AI , especially the use of copyrighted works to train AI systems.

The Copyright Act defines a creator as a “natural person or legal entity”, making it difficult to include AI in that definition .

In addition, the Copyright Act is also very limited in accommodating the concept of “ transformative use .” This means that copyrighted material can only be used without the owner’s permission for educational, research, security, and non-commercial purposes .

In general, the use of AI in Indonesia can be included in the provisions of the Electronic Information and Transactions Law (ITE) and the Personal Data Protection Law (PDP) .

However, the ITE Law uses the term “electronic agent” which is still being debated regarding its scope for AI systems. This is because, with the category of “electronic agent”, all legal obligations are imposed on the provider of AI-based devices.

Because it is only considered a tool or intermediary, AI is not a party that can be held directly responsible . This means that if there is a violation, the legal process refers to general regulations such as consumer protection or civil/criminal liability by the system manager.

As data controllers, service providers like OpenAI are required to comply with the Personal Data Protection Act , which regulates the management and processing of personal data. However, it is unclear how this regulation will address the problems that AI may pose.

Need to catch up

Regulations in Indonesia should be able to adapt to the latest AI developments. China, for example, has issued the New Generative AI Development Plan as the main foundation for governance of various types of AI since 2017.

The latest discourse regarding the formation of AI regulations in Indonesia is contained in the National Strategy for Artificial Intelligence (Stranas KA) which was prepared by representatives of experts under the supervision of the Agency for the Assessment and Application of Technology (BPPT).

However, until now there has been no specific draft regulation aimed at regulating AI systems, especially regarding copyright and works produced by AI. Most attention is still focused on AI output .

The government and policymakers in Indonesia must immediately create a framework to regulate the use of AI. This is not only for legal protection, but also for the sake of justice for artists, writers, and individuals whose data is used without consent.

As users, we should also be more careful and avoid sharing sensitive or personal information when using AI systems. We need to understand the data settings so we can determine whether we want our interactions to be stored or deleted after use.

Author Bio: Haekal Al Asyari is a Lecturer, Universitas Gadjah Mada and Ph.D. Candidat at the University of Debrecen

Are you one of those who followed the trend of editing photos in the style of ‘Ghibli’ last Eid?

The trend has now spread to other visual styles, such as The Muppets , Pixar , and action heroes .

Aesthetic images created by artificial intelligence (AI) are certainly appealing. However, behind the stunning visuals, there is the potential for copyright infringement and misuse of personal data.

Are you one of those who followed the trend of editing photos in the style of ‘Ghibli’ last Eid?

The trend has now spread to other visual styles, such as The Muppets , Pixar , and action heroes .

Aesthetic images created by artificial intelligence (AI) are certainly appealing. However, behind the stunning visuals, there is the potential for copyright infringement and misuse of personal data.